by Fadi Obeid, Principal Engineer & Tech Lead, Ad Products

If you’ve worked on or with real-time systems, you’ve probably had to deal with event logging. At ShareThis, we’re all about real-time: we gather social signals as they happen, apply business logic to these signals, and finally transform them into complex ad-targeting rules.

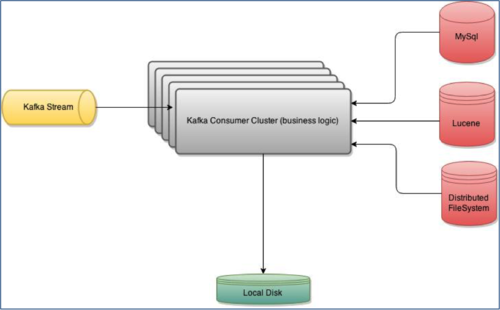

In this post we cover event logging at scale. A simplified version of our pipeline looks like the following:

The Kafka stream pumps around 800 million to 1 billion events per day. Each event expands into an average of 12 virtual events, so the business logic inside the Kafka consumer cluster has to process and evaluate roughly 9.6 to 12 billion events per day and log qualifying events to disk.
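To put those daily totals in per-second terms, here is a small back-of-the-envelope calculation. It assumes load is spread evenly across the day, which real traffic is not, so peak rates will be higher. The class and method names are made up for illustration.

```java
/** Back-of-the-envelope event volume, assuming evenly distributed load. */
final class EventVolume {
    static final long SECONDS_PER_DAY = 24L * 60 * 60; // 86,400

    /** Virtual events per day for a given raw volume and fan-out factor. */
    static long virtualEventsPerDay(long rawEventsPerDay, int fanOut) {
        return rawEventsPerDay * fanOut;
    }

    /** Average events per second (integer division, rounds down). */
    static long averagePerSecond(long eventsPerDay) {
        return eventsPerDay / SECONDS_PER_DAY;
    }

    public static void main(String[] args) {
        long low = virtualEventsPerDay(800_000_000L, 12);    // 9,600,000,000
        long high = virtualEventsPerDay(1_000_000_000L, 12); // 12,000,000,000
        System.out.println(low + " to " + high + " virtual events per day");
        System.out.println(averagePerSecond(low) + " to " + averagePerSecond(high)
                + " virtual events per second on average");
    }
}
```

That works out to an average of roughly 111,000 to 139,000 virtual events per second across the whole cluster.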

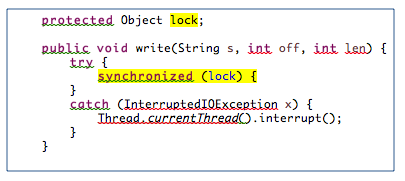

Initially, we had a 1×1 mapping of raw event to virtual event, which was not a big deal since we had “enough machines”. The cluster consisted of three c3.xlarge instances and was backed by a homegrown synchronous logger.

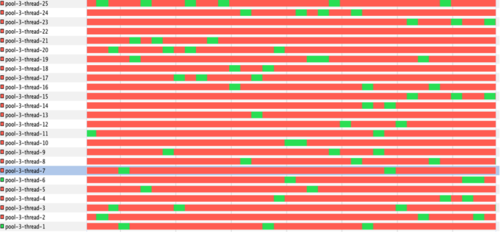

With time, the mapping increased, from 1×1 to 1×3 and so on. Each box in the Kafka consumer cluster was running 25 threads, and under load, the effect of locking the write operation, as seen in this code snippet, was crippling. The thread pool executor was falling behind in its ability to service incoming requests, and the Kafka offset started lagging, sometimes to the point where it could never catch up.
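As a rough illustration of the pattern described above, and not the original ShareThis logger, a synchronous logger that guards every write with a single lock might look like the sketch below. The class name and the choice of a `Writer` as the sink are assumptions made for this example.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.io.Writer;

/** Hypothetical synchronous logger: every caller serializes on one lock. */
final class SynchronousEventLogger {
    private final Writer out;
    private final Object lock = new Object();

    SynchronousEventLogger(Writer out) {
        this.out = out;
    }

    /** Writes and flushes one event; the calling thread pays for the I/O. */
    void log(String event) {
        synchronized (lock) { // only one of the worker threads gets past here at a time
            try {
                out.write(event);
                out.write('\n');
                out.flush();
            } catch (IOException e) {
                throw new UncheckedIOException(e);
            }
        }
    }
}
```

With 25 worker threads calling `log`, 24 of them can be parked on the lock while one performs disk I/O, which matches the kind of blocking a thread profile would reveal.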

A profile of the running threads showed that they were blocked almost all the time. Yikes!

We started looking at our options. Our list came down to:

- Add more machines

- Homegrown asynchronous logger

- Log4j2 asynchronous + disruptor + RandomAccessFile
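The two asynchronous options share one idea: worker threads hand events to a buffer and return, while a dedicated thread does the actual writing. The sketch below shows that handoff using a plain `ArrayBlockingQueue` from the JDK. It is a simplified stand-in, not our logger and not the Disruptor, which uses a lock-free ring buffer rather than a locked queue. All names here are hypothetical.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.io.Writer;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

/** Hypothetical asynchronous logger: producers enqueue, one thread writes. */
final class QueueingEventLogger implements AutoCloseable {
    // Sentinel compared by identity, so an event with the same text is not mistaken for it.
    private static final String STOP = new String("STOP");

    private final BlockingQueue<String> queue;
    private final Thread writerThread;

    QueueingEventLogger(Writer out, int capacity) {
        this.queue = new ArrayBlockingQueue<>(capacity);
        this.writerThread = new Thread(() -> drain(out), "event-log-writer");
        this.writerThread.start();
    }

    /** Hands the event to the writer thread; blocks only when the queue is full. */
    void log(String event) throws InterruptedException {
        queue.put(event);
    }

    /** Runs on the writer thread: the only place that touches the sink. */
    private void drain(Writer out) {
        try {
            for (String event = queue.take(); event != STOP; event = queue.take()) {
                out.write(event);
                out.write('\n');
            }
            out.flush();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    /** Drains everything queued so far, then stops the writer thread. */
    @Override
    public void close() throws InterruptedException {
        queue.put(STOP);
        writerThread.join();
    }
}
```

The bounded capacity is a deliberate choice in this sketch: if the writer cannot keep up, producers slow down instead of exhausting memory.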

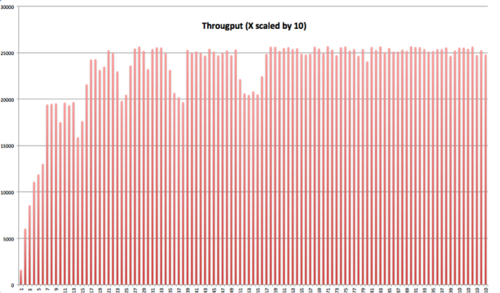

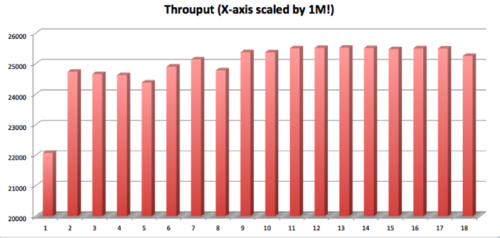

We went through the pros and cons and landed on Log4j2. We benchmarked it, and the results were very impressive. For the purposes of this post, we’re showing two charts: the first shows throughput at low load, and the second shows throughput at high load. The nice parts were how easy it was to wire into the existing project, the support, and the ample documentation.
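For readers who want a starting point, a minimal `log4j2.xml` for this kind of setup might look like the fragment below. It is a sketch rather than our production configuration: the appender name, file path, and pattern are placeholders. It also assumes the LMAX Disruptor jar is on the classpath and that all loggers are made asynchronous by starting the JVM with `-DLog4jContextSelector=org.apache.logging.log4j.core.async.AsyncLoggerContextSelector`.

```xml
<?xml version="1.0" encoding="UTF-8"?>
<!-- Sketch only: names and paths below are placeholders. -->
<Configuration status="WARN">
  <Appenders>
    <!-- immediateFlush="false" lets the appender batch writes instead of flushing per event -->
    <RandomAccessFile name="EventLog" fileName="logs/events.log"
                      immediateFlush="false" append="true">
      <PatternLayout pattern="%m%n"/>
    </RandomAccessFile>
  </Appenders>
  <Loggers>
    <Root level="info" includeLocation="false">
      <AppenderRef ref="EventLog"/>
    </Root>
  </Loggers>
</Configuration>
```

Application code keeps calling the usual `Logger` methods; the switch to asynchronous logging happens through the context selector and the configuration file.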

In conclusion, we can safely say that our “Log4j2 asynchronous + disruptor + RandomAccessFile” choice for logging was the right one. If you’re going through a similar process, we highly recommend profiling your application, laying out your options, and benchmarking them.